Hi there! Video generation AI has been making remarkable progress lately. Have you heard about the newly released FLF2V model for Wan2.1? FLF stands for FirstLastFrame: you specify two images as the first and last frames, and the model generates the frames in between. Its quality is quite good. The key point of this model is that, with a little ingenuity, you can create looping animations that look seamless! Since a GGUF version has already been released, I will explain how to use it with ComfyUI and, at the end, show a workflow that improves on my previous one.

About this model

I2V had a lightweight 1.3B-480p model, but FLF2V is available only as a 14B-720p model. With less than 16 GB of VRAM, the GGUF version is almost mandatory (I use it too).
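To see why a quantized file matters at this size, here is a rough back-of-the-envelope estimate of the weight storage alone. It is only a sketch: the bits-per-weight figures are my own assumptions (16 bits for fp16, and 4.5 bits for GGUF Q4_0, which packs 32 four-bit weights plus one fp16 scale per block). A real file differs somewhat because some tensors are kept at higher precision, and the text encoder, VAE, and activations need memory on top of this.

```python
# Rough estimate of weight storage for a 14B-parameter model.
# Assumptions (not from the article): fp16 = 16 bits per weight,
# GGUF Q4_0 = 4.5 bits per weight (32 x 4-bit values + one fp16 scale per block).
PARAMS = 14e9


def weights_gib(params: float, bits_per_weight: float) -> float:
    """Return the approximate weight size in GiB."""
    return params * bits_per_weight / 8 / 2**30


fp16_gib = weights_gib(PARAMS, 16.0)
q4_0_gib = weights_gib(PARAMS, 4.5)
print(f"fp16: {fp16_gib:.1f} GiB, Q4_0: {q4_0_gib:.1f} GiB")
```

Under these assumptions the fp16 weights alone exceed 16 GB, while the Q4_0 weights fit with room to spare.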

Setup

To use it, you must first update ComfyUI to Ver. 3.19 or higher. If you have not yet done so, please do so!

Model to be used

The official model is too large, so as usual I use the GGUF model published by city96. I use wan2.1-flf2v-14b-720p-Q4_0.gguf and put it in [ComfyUI/models/unet].

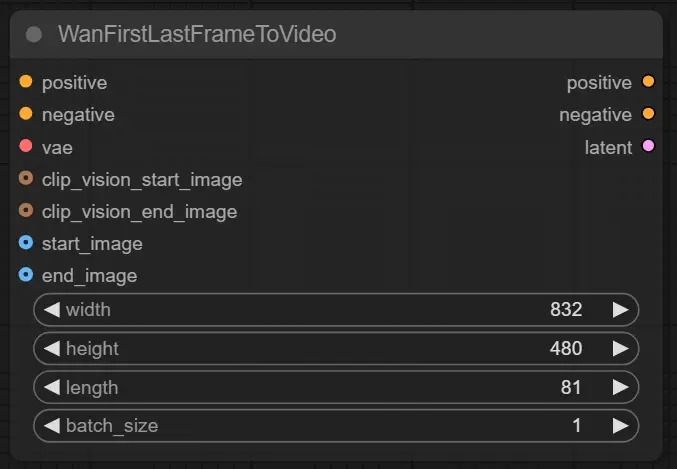

WanFirstLastFrameToVideo

Compared to the WanImageToVideo node, this node has one additional input each for clip vision and image.

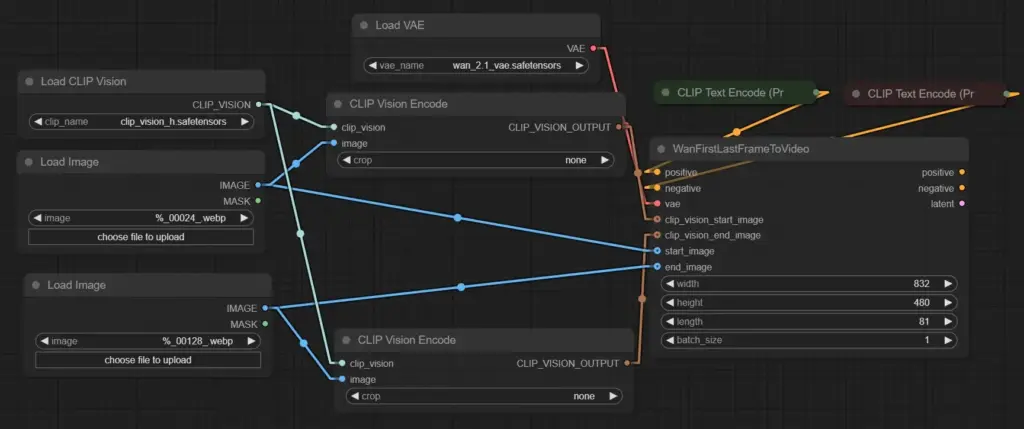

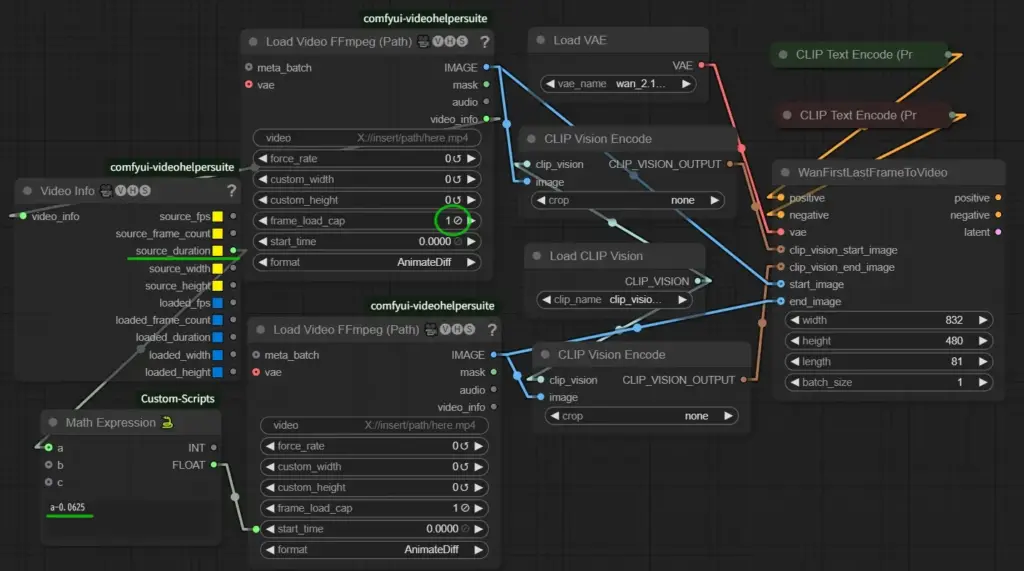

As shown above, each image is paired with its own CLIP Vision Encode node, one for the start frame and one for the end frame.

The rest is the same as the basics.

Comparison with I2V model

Unlike the normal I2V model, this model gets slower as generation moves from the beginning toward the end. The log shows an estimated finish time based on the average so far, but the actual run takes considerably longer than that. Overall it takes roughly 40% more time than I2V.

How to make a loop video

The method is simple: use the last frame of the video you want to loop as the first frame, and its first frame as the last frame. For a video created with I2V, the end frame can simply be the image that was used to generate it (if connecting the nodes is too much trouble), but taking both frames from the video is more convenient later, because the video alone is then enough. The image below is an example using a video generated by Wan2.1.
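The frame swap can be sketched in a few lines of Python. This is only an illustration with frames represented as list items. The function names are hypothetical, and dropping the duplicated end frames when stitching is my own assumption, not something the workflow is confirmed to do.

```python
from typing import List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def flf2v_endpoints(clip: Sequence[Frame]) -> Tuple[Frame, Frame]:
    """Return (start_image, end_image) for the bridging FLF2V clip.

    The bridge starts on the clip's last frame and ends on its first frame.
    """
    if not clip:
        raise ValueError("clip must contain at least one frame")
    return clip[-1], clip[0]


def stitch_loop(clip: Sequence[Frame], bridge: Sequence[Frame]) -> List[Frame]:
    """Join the clip and the bridge into one loop.

    Assumption: the bridge's first and last frames duplicate the clip's
    last and first frames, so they are dropped here.
    """
    return list(clip) + list(bridge[1:-1])


clip = ["A", "B", "C"]
start_image, end_image = flf2v_endpoints(clip)
bridge = [start_image, "x", "y", end_image]  # stand-in for the FLF2V output
loop = stitch_loop(clip, bridge)
```

When `loop` is played repeatedly, the frame after "y" is "A" again, which is what makes the loop continuous.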

This method uses two Load Video nodes. The first, with the value circled in green, reads only the first frame. For the second, a Math Expression node takes the source duration in seconds from the videoinfo and subtracts 0.0625 seconds, the length of one frame. The result is connected to the start_time of the second Load Video node, so that only the last frame is loaded.
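The calculation done by the Math Expression node amounts to the following. This is a minimal sketch: the function name is hypothetical, and the 5.0625-second duration (81 frames at 16 fps) is only an example value I picked.

```python
FRAME_SECONDS = 0.0625  # length of one frame, as stated above (1/16 second)


def last_frame_start_time(duration_seconds: float,
                          frame_seconds: float = FRAME_SECONDS) -> float:
    """Return the start_time at which only the final frame remains."""
    # Clamp at zero so a clip shorter than one frame does not go negative.
    return max(0.0, duration_seconds - frame_seconds)


# Example: an 81-frame clip at 16 fps lasts 5.0625 seconds.
start_time = last_frame_start_time(5.0625)
```

If your video uses a different frame rate, pass `1 / fps` as `frame_seconds` instead of the default.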

About custom nodes in comfyui-videohelpersuite

I believe comfyui-videohelpersuite has two custom nodes for loading videos. My previously published workflow used the other one, but the colors shifted as I repeatedly reused videos. After I replaced it with the Load Video FFmpeg node, the color problem disappeared, so I recommend that node if you use this suite.

Generation example

Below is an example of a video generated by my workflow.

I used this image to generate a video with I2V, then generated a second video with FLF2V from the last and first frames of that video.

These two videos were stitched together and converted to 60fps here.

What do you think? I think it loops nicely! Please check out some more examples on Civitai as well. (Some of them are a bit… eh… weird, so please be careful when watching.)

Improved workflow including FLF2V

I have uploaded my improved workflow, including FLF2V, to Civitai. Here are my notes on what has been improved.

Wan2.1 advanced workflow including low VRAM speed-up to 60fps | なんなこなここ

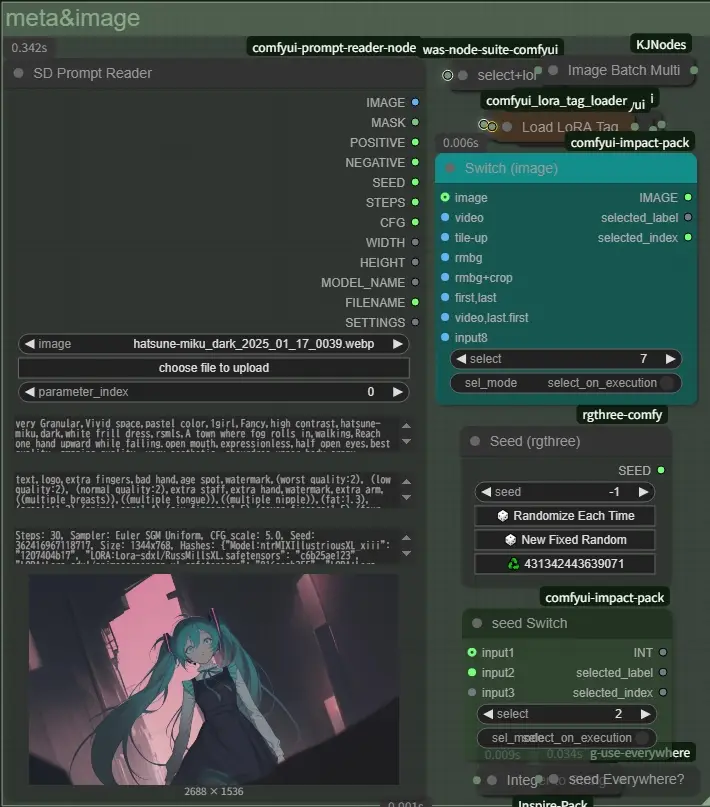

Ability to instantly switch between I2V and FLF2V

When one of these two groups is muted, the other is activated, and vice versa, so you can switch between them with one button. The model is selected in the Unet node, which you need to open if you want to change it.

Image Selection

I removed “up-video” because I didn’t feel it was effective.

“first,last.first” loads the first frame from this image and the last frame from the loadimage on the left.

“video,last.first” loads the last frame of the video selected in the video-path group as the first image and its first frame as the second image. The saved filename is based on the video name, not the image name.

If the video follows the same naming convention as videos created in this workflow, set the seed switch to 2 to use the seed value stored in the video name. Otherwise, set it to 1.

Crop

Crop or rotate a specific area of an image and then pass it through an rmbg node.

Color Match

Added a Color Match node to the main and merge groups. This reduces uneven color differences between the before and after videos.

Major changes and notes

This workflow has been updated for the latest ComfyUI environment, so please install the latest version of ComfyUI and version 1.17.11 of comfyui_frontend_package. (It should be installed automatically when ComfyUI is updated.)

About cg-use-everywhere custom node

The change in comfyui_frontend_package affected many custom nodes, and cg-use-everywhere is one of them. Its latest version now works, but version 5.0.10 might be the better choice in one case, even though it shows a warning at startup. If you want to connect vae to a node such as Video Combine, you need version 5.0.10. Otherwise the image connection is broken every time. This is probably because the input is displayed as image but works internally as latent, which trips up the reference method of the latest version. If you don’t use that kind of connection, the latest version is fine. Thanks to the author for updating it as needed!

Custom nodes that must be upgraded other than those listed above

Other minor changes

・The CRTLike effect in the pixel group was notorious for freezing when processing many frames, so a Meta Batch Manager was added to process 64 frames at a time. showtext was removed because an error message was repeatedly displayed when trying to output the filename.

・Removed switch for frame in video-path group, added video2con

・Replaced with Load Video FFmpeg (improved color issues)

・Added switches to mute and activate upscale, combined, film, and pixel groups simultaneously
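The idea behind processing 64 frames at a time, mentioned in the first item above, can be illustrated with a small chunking helper. This is a generic sketch of batching, not the Meta Batch Manager's actual implementation, and the function name is hypothetical.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(frames: Sequence[T], size: int = 64) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most `size` frames."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(frames), size):
        yield list(frames[start:start + size])


# Example: 150 frames are split so that no step handles more than 64.
batch_sizes = [len(batch) for batch in batches(list(range(150)))]
```

Only one chunk is held and processed at a time, which keeps a heavy effect from having to handle every frame at once.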

Finally

How was it? Reworking the workflow took me a long time this time: reloading nodes… connecting them… error… reloading… connecting them… error… over and over. I hope you will try this workflow to loop your favorite videos and experiment with it! See you soon!
